Sunday morning, the sun softly touching me while I am sitting at my laptop at the far end of our kitchen table. Time to write the post that has been on my mind for quite a while. A topic partly born out of amazement, an amazement triggered every now and then when I hear fellow professionals say that it makes no sense to run the standard tests [1] on your solution. For more than three years now, our daily practice has shown otherwise. That's what I would like to share with you below.

Why we run standard tests

The MS test set, provided as part of the product, is a humongous body of tests. When it first became part of the product in NAV 2016, it comprised 16,000+ tests, and the number has grown with every minor and major release since, to more than 22,000 tests nowadays. This collateral covers all standard application areas, like G/L, Sales, and Purchase. This description implicitly holds the two major reasons why we adopted the standard tests as part of our test automation:

- our solution extends a major part of the standard application, specifically the areas mentioned above, so running standard tests that cover these areas will almost unavoidably hit our code

- these potentially thousands of tests hitting our code come for free, saving us an almost unimaginable amount of time [2]

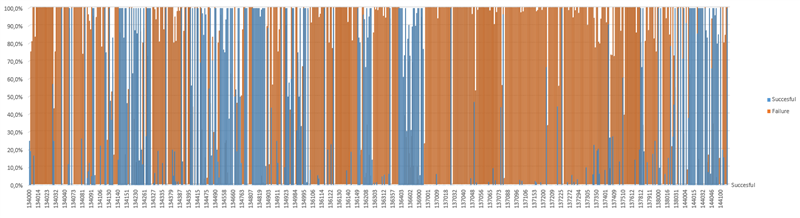

As the saying goes, the proof of the pudding is in the eating, so we first ran the standard tests on our code exactly as I described in this post. Seeing the result of this first run, my first thought was one of dismay, as only a good 22 % of the tests succeeded. On second thought, however, this turned out to be a great result, as it meant that the vast majority of the MS tests, i.e. 77 %, did hit (some of) our code. These are, to say the least, tests that matter to our solution. Getting them to work would give us a nice number of tests validating (part of) our code.

Overall relative result of our first test run, showing the approx. 77 % failures in orange.

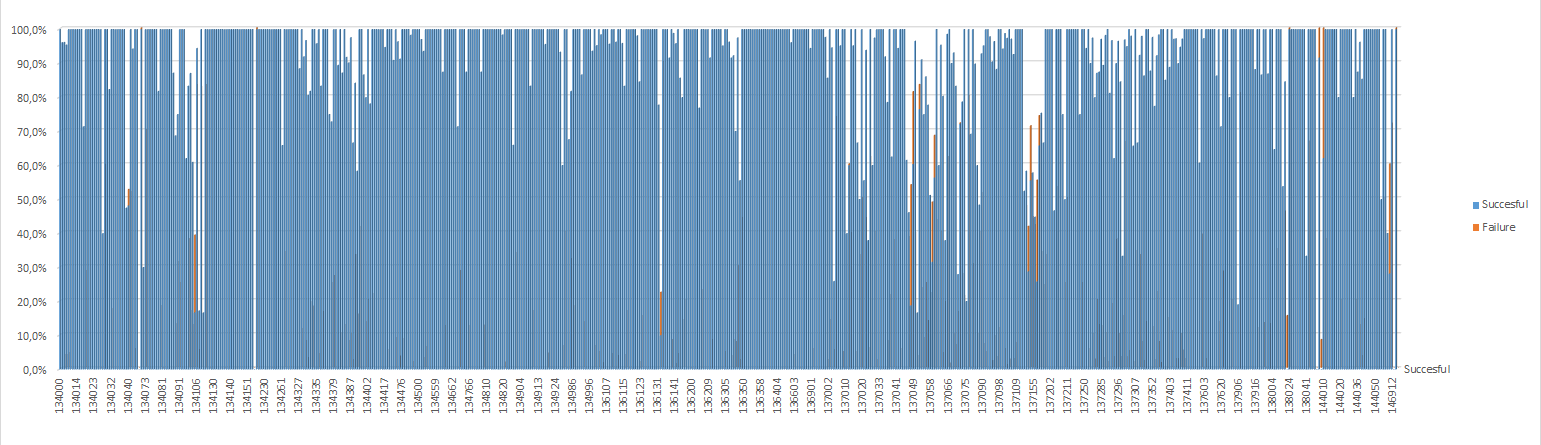

What did we do

First of all, we dreamed that we could get these tests running on our code in a couple of weeks: walking like Little Thumb in the Ogre's seven-league boots, as we say. Secondly, we found a way to make big jumps with as little effort as possible. It's what I call a statistical approach: load the test results into Excel, list the most frequent errors using a number of pivot tables, analyze their cause, and find a simple, generic solution. It turned out that for approx. 90 % of the failures, the fix was to update the data in the database under test, meaning that we just needed to provide some extra shared fixture. In this post I talked about fixture, and more specifically shared fixture, and how we extended it for the standard tests by hooking into the MS Initialize functions. After a first full week of work by one FTE, the total success rate was lifted to 72 % (from the good 22 % already mentioned); a second week raised this to almost 80 %, and after an additional 4 weeks the meter eventually stopped at 90 %. An effort of 6 weeks of work by one person (spread over a couple of months) yielded approx. 12,500 tests that we could add to our test collateral.
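The analysis described above was done with pivot tables in Excel. The same idea can be sketched as a small Python script, as a minimal sketch only, assuming the test results were exported to CSV with hypothetical columns `Codeunit`, `Function`, `Result` and `Error Message`, where `Result` is either `Success` or `Failure`. Error messages usually contain record-specific values (a customer or item number), so the hypothetical `normalize` function masks whatever follows an `=` sign to let similar errors group together; that masking rule is an assumption, not part of the original approach.

```python
import csv
import io
import re
from collections import Counter

# Hypothetical CSV export of a test run; column names and values are assumptions.
SAMPLE_RESULTS = """Codeunit,Function,Result,Error Message
134000,TestPostSalesInvoice,Failure,Gen. Bus. Posting Group must have a value in Customer: No.=10000. It cannot be zero or empty.
134000,TestPostSalesCrMemo,Failure,Gen. Bus. Posting Group must have a value in Customer: No.=20000. It cannot be zero or empty.
134001,TestPostPurchInvoice,Success,
134002,TestReleaseSalesOrder,Failure,Location Code must have a value in Item: No.=1000. It cannot be zero or empty.
"""


def normalize(message: str) -> str:
    """Mask record-specific values (e.g. 'No.=10000') so similar errors group together."""
    return re.sub(r"=[^,.\s]+", "=<value>", message).strip()


def success_rate(csv_text: str) -> float:
    """Share of tests whose Result is 'Success'."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    passed = sum(1 for row in rows if row["Result"] == "Success")
    return passed / len(rows) if rows else 0.0


def top_errors(csv_text: str, top_n: int = 10) -> list[tuple[str, int]]:
    """Count failed tests per normalized error message, most frequent first."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter(
        normalize(row["Error Message"])
        for row in reader
        if row["Result"] == "Failure"
    )
    return counts.most_common(top_n)


if __name__ == "__main__":
    print(f"Success rate: {success_rate(SAMPLE_RESULTS):.0%}")
    for message, count in top_errors(SAMPLE_RESULTS):
        print(f"{count:>5}  {message}")
```

Running it prints the success rate followed by the error messages ordered by frequency. On a real run, the top few lines point at the generic fixes worth making first, much like the pivot tables did.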

Overall relative result of our test run after 6 weeks of work, showing the approx. 90 % passing tests in blue.

What did we gain

Six weeks of work by one FTE for 12,500 tests might sound impressive, given the time it would take to create 12,500 tests ourselves. But the sheer number of tests should not be a goal in itself. The goal is a useful collection of tests that supports us in our daily work by showing, when rerun on a regular basis, that our existing functionality still does what we expect it to do.

So what did we gain getting that massive number of tests at our disposal?

Our statistical approach intrinsically disregarded any detailed knowledge of the standard tests. Knowing that they covered the various standard application areas, we assumed they would be meaningful to us. Seeing the vast majority of the tests fail was a first indication that our assumption made sense. Having used these tests for our nightly test run over the last 3 years, we can only confirm that these 12,500 tests [3] have been of great value. They have saved us many times from post-go-live bugs, as these were already detected by our automated tests, not least due to their greater reach compared with manual testing. They also brought our development focus, including manual testing, to a next level, as we can rely on the nightly test run. In parallel with this move, we raised the level of our development practice by introducing Azure DevOps pipelines to automate builds and deployments. I guess it has never been a secret that I am a huge fan of this. All these efforts brought us very close to continuous delivery. Actually, it's more than very close, as we could update our production environment at any time if needed. For practical reasons, we normally update over the weekend.

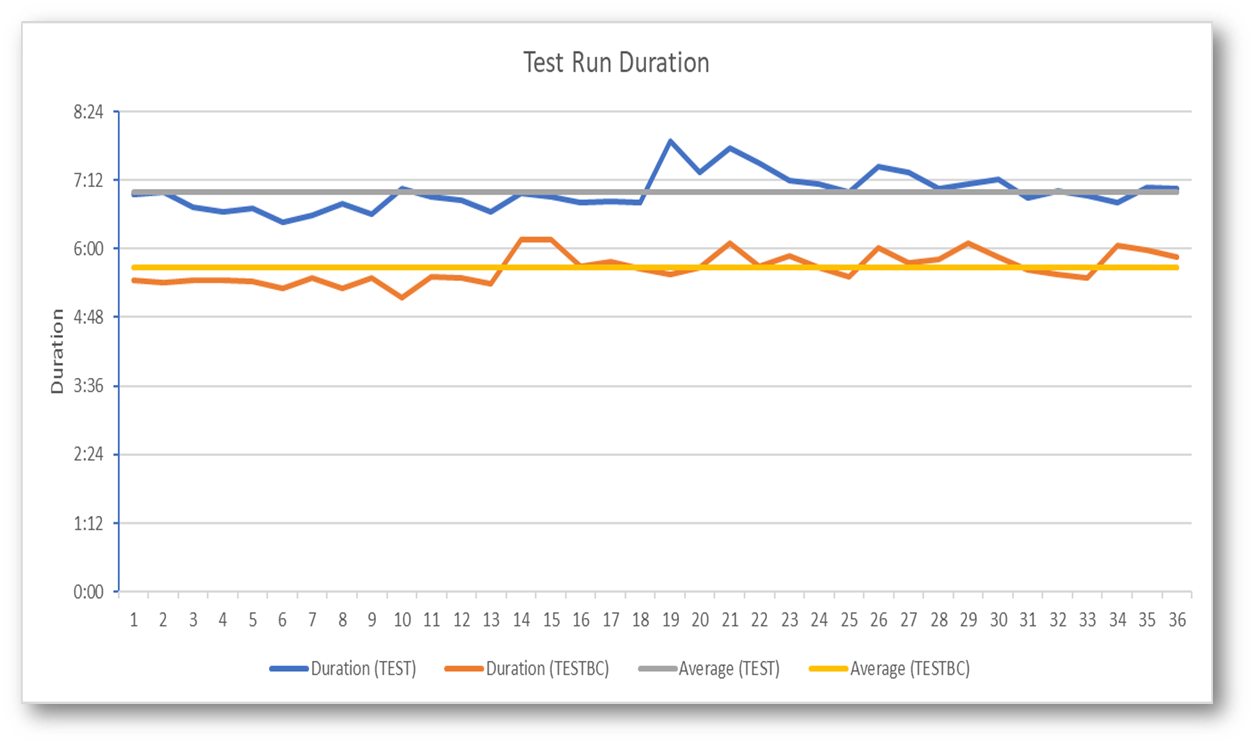

Being one of the first end-user companies to go live on NAV 2018 at the start of 2018, coming from NAV 2016, depended highly on our test collateral; we couldn't have achieved this in such a short time without it. It showed its value again in the technical upgrade to BC 14 that we have been investigating recently, where the test run lead time turned out to be significantly lower on BC 14 than on NAV 2018 [4].

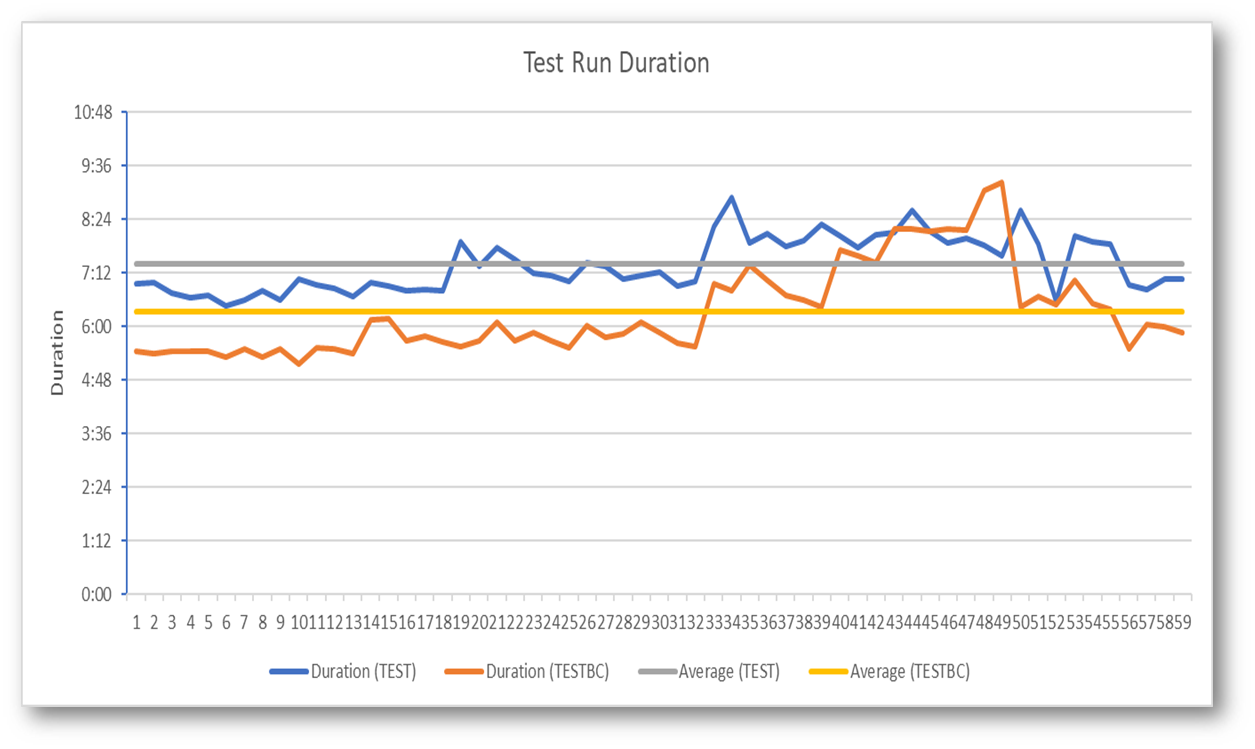

Test run lead time registered for a number of runs on the NAV 2018 (blue/grey) and BC 14 (orange/yellow) platforms.

Conclusion

Without a doubt, incorporating standard tests in our daily work has tremendously helped us:

- improve the quality of our solution

- raise the level of our development practice

- increase our development throughput

I reckon you can (now) understand the amazement I expressed above. Unless your solution is fully independent of the standard application, I honestly do not see why you would not make use of the standard MS tests. Not doing so at all means selling yourself short. Even a simple, small extension, like the LookupValue business case I used in my book, has its code touched by more than 3,000 tests on BC 14!

BTW: learn more about how to get MS tests working on your solution from my book and by joining my online crash course Test Automation, of which a 5th run will start on May 25 (still some seats available).

Footnotes

[1] By standard tests I mean the tests provided by MS as part of their product.

[2] A simple calculation I show during my workshops is the following: let's say that, having gained a certain degree of experience in writing automated tests, you are able to create one automated test in 10 minutes (which is not that unimaginable), so 6 per hour, for 7 hours a day. Getting to 20,000 tests would then take you, as one person, almost 3 years.
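Written out, the numbers of this footnote give the following number of working days; how many calendar years that amounts to depends on how many days a year one can actually spend writing tests, which is left as an assumption here:

$$
\frac{20{,}000 \ \text{tests}}{6 \ \tfrac{\text{tests}}{\text{hour}} \times 7 \ \tfrac{\text{hours}}{\text{day}}} = \frac{20{,}000}{42} \ \text{days} \approx 476 \ \text{working days}
$$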

[3] These 12,500 tests on NAV 2016 have grown to almost 14,000 tests on NAV 2018.

[4] While we were working on this project, the lead time all of a sudden increased dramatically, only to recover about 2 weeks later, when we finally found out that a Windows Server update rolled out just before the increase contained a bug. Once this was fixed, the lead time was back to “normal”. See how the graph notified us.

Test run lead time increase due to a bug in Windows Server update. Note that the increase was more dramatic on BC 14 (orange/yellow) than on NAV 2018 (blue/grey). We never found out what caused the difference.